Over the course of the last week I’ve reread all of John Siracusa’s OS X reviews for Ars Technica, starting with OS X Developer Preview 2 and finishing with OS X Mavericks. This page handily lists all of the reviews (earlier reviews linked back, but this pattern ended at 10.5).

My motivation was to learn a little more about the history of OS X, as I’ve only been using Macs since Snow Leopard. These reviews are a great place to get a sense of the history of the OS because each was written at the time of its version’s release, rather than being regularly updated with new information, as the Wikipedia articles are.

The early reviews (the first seven are all about 10.0 and its betas) focussed on the differences between OS X, raw UNIX and Mac OS 9. Whilst 10.0 is very different, there are striking similarities to the OS X I use every day: it already had Aqua, HFS+, Cocoa, etc. Many of the key differences, for Siracusa at least, were in the user interface and experience, the Finder and the file system (a font kerning issue in the Terminal, which persisted for several versions, was also his pet peeve).

As OS X progressed, the reviews did too. Up until 10.4 Tiger, the reviews often discussed the performance changes from the previous version (especially the fact that each release got faster on the same hardware), whereas after this the performance improvements were much smaller. Siracusa, whilst expressing admiration, did point out the cynic’s response: 10.0 was so slow that there was a lot of room for improvement.

Most early reviews also advised the reader on whether or not they should upgrade, especially given that each new release was a $129 upgrade. Later reviews do not even consider this (at one point Siracusa even considered it a compulsory ‘Mac tax’) but with Mavericks being free, price is given a final consideration in its review. Modern reviews seem to have this pattern:

- Introduction and summary of expectations from the last review

- The new features

- Performance changes, if any

- Kernel changes and enhancements

- New technologies and APIs, if any

- File system rant (which, having read the earlier reviews and got some context, now seems justified)

- Grab bag (summary of minor apps and changes)

- Conclusion and looking ahead to the future

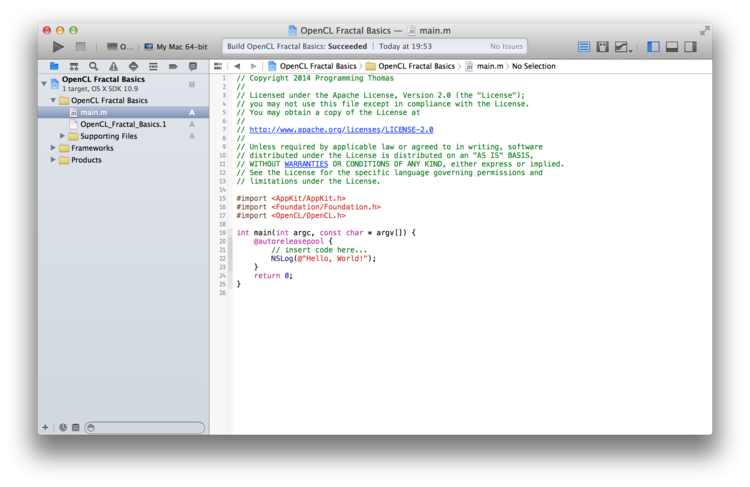

The evolution of OS X is fascinating from both a user and a developer perspective. As new developer technologies, like Quartz 2D Extreme/QuartzGL, Core Image/Audio/Video, OpenCL, Objective-C 2.0, Blocks, GCD and ARC, emerged, each was carefully explained. Whilst I am familiar with these technologies today, it was awesome to see when, why and how they were released. Transitions in other areas (32-bit to 64-bit, PowerPC to Intel, ‘lickable’ Aqua UI to a flatter UI) were also interesting to read about, especially given a modern perspective.

Reading the reviews in 2014 has been fun, especially considering that some of them are almost 15 years old. Often Siracusa made predictions of varying accuracy:

- Tags were predicted in the Tiger review

- High DPI/retina displays were predicted when support was first added in OS X (although retina displays weren’t considered until the Lion review)

- HFS+ would be replaced

- Macs with 96GB of RAM (this was in the Snow Leopard review, but the current Mac Pro can be configured to ship with up to 64GB and supposedly supports up to 128GB)

Another interesting set of articles was the ‘Avoiding Copland 2010’ series, which was written in 2005 and discussed the various enhancements that Apple would have to make to Objective-C in order for it to remain competitive with other high-level languages. I also recommend listening to the associated 2011 episode of Hypercritical, A Dark Age of Objective-C. With the recent debate over whether Objective-C/Cocoa should be replaced (they shouldn’t), these articles are surprisingly relevant.

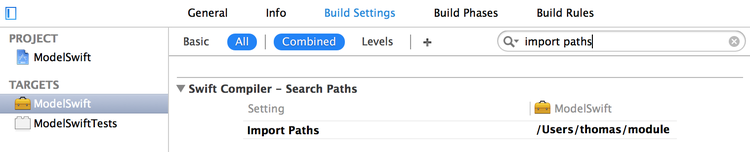

I highly recommend reading the OS X reviews if you’re a developer and haven’t read them before, or are just interested in the history of the OS. I ended up reading all of the reviews in Safari’s reading mode because it was able to take the multi-page reviews and stick them on one page (albeit with some images missing). In the past I had used Instapaper for this, but it occasionally seems to miss multi-page articles.